Over the last two years, a quiet revolution has taken hold in how software gets built. Large frontier models from major AI labs have matured to a point where they do not just assist with coding. They reshape the entire lifecycle of how software moves from idea to production. And with this shift comes a question that few enterprise technology leaders are asking loudly enough: if anyone can now generate code, who is responsible for making sure it is the right code?

I have been thinking about this through an unusual lens. The structural principle that democratic societies use to prevent unchecked power applies remarkably well to AI-driven development. Legislative, Executive, Judicial. Specification, Code Generation, Quality Review. Three branches, each essential, none sufficient alone.

The Democratization Nobody Expected

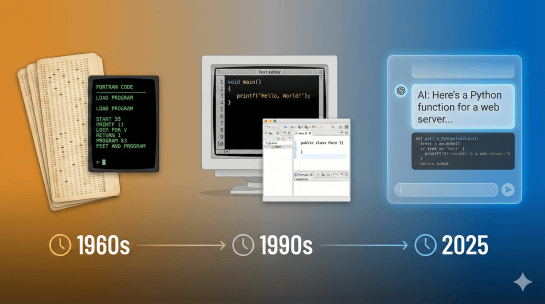

For decades, writing software was a craft reserved for those who understood syntax, compilers, and the particular pain of a misplaced semicolon. Today, state-of-the-art foundation models can generate entire modules from a natural language description. A product manager can describe a feature and get working code within minutes. A domain expert with no programming background can prototype a data pipeline overnight.

This is genuine democratization. But democratization without structure leads to chaos. When everyone can generate code but nobody owns the specification or validates the output, you get “vibe coding” — throwing prompts at an AI and hoping for the best. It works for demos. It fails for production systems.

What the industry needs is not less AI involvement. It needs governance. It needs separation of concerns at the process level.

The Legislative Branch: Specification as Law

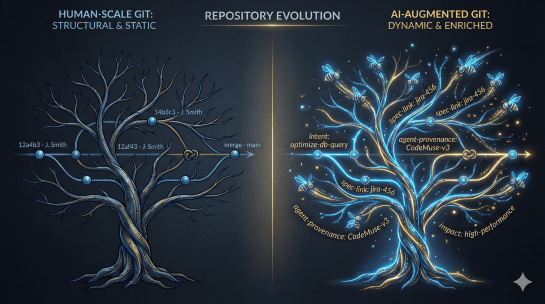

In a functioning democracy, the legislature writes the laws. In spec-driven development, the specification is the law. It defines intent, constraints, and architecture decisions before a single line of code is generated.

This is exactly what frameworks like Spec Kit have formalized. The open-source toolkit treats specifications not as disposable documentation that rots the moment code is written, but as living, executable artifacts. Commands like /specify, /plan, and /tasks structure the workflow around intent first, implementation second. Code serves specifications, not the other way around.

The BMAD Method takes this further with its multi-agent approach. Specialized AI agents — an Analyst, a Product Manager, an Architect — collaborate to produce comprehensive requirement documents and architecture specifications before any development agent touches the codebase. The “Agentic Planning” phase is essentially a legislative process: multiple perspectives debating and refining the rules that govern implementation.

The human role here is critical. You are the lawmaker. Advanced reasoning systems help you articulate requirements and stress-test architecture decisions. But the intent, the business logic, the “why” — that remains yours.

The Executive Branch: Code Generation as Implementation

The executive branch implements laws; it does not write them. In our metaphor, this is where advanced coding systems from major AI labs do their most visible work. Given a well-defined specification, the latest generation of reasoning systems produces code with remarkable speed and consistency.

BMAD’s “Context-Engineered Development” phase illustrates this well. The Scrum Master agent breaks the specification into hyper-detailed story files containing full architectural context, implementation guidelines, and testing criteria. The development agent works from these self-contained packages — no context collapse, no re-explaining requirements.
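
To make the idea of a self-contained work package concrete, here is a minimal sketch in Python. It is an illustration only, not BMAD's actual file format: the `StoryFile` class, its field names, and the sample story are assumptions chosen to mirror the three ingredients named above (architectural context, implementation guidelines, testing criteria).

```python
from dataclasses import dataclass, field


@dataclass
class StoryFile:
    """Hypothetical self-contained work package; not BMAD's real format."""
    story_id: str
    requirement: str
    architectural_context: str = ""
    implementation_guidelines: list[str] = field(default_factory=list)
    testing_criteria: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the sections a development agent would have to guess at."""
        missing = []
        if not self.architectural_context.strip():
            missing.append("architectural_context")
        if not self.implementation_guidelines:
            missing.append("implementation_guidelines")
        if not self.testing_criteria:
            missing.append("testing_criteria")
        return missing


# Sample story (invented for illustration) that lacks testing criteria.
story = StoryFile(
    story_id="STORY-1",
    requirement="Export monthly invoices as CSV",
    architectural_context="Runs in the billing service; reads the invoices table.",
    implementation_guidelines=["Stream rows instead of loading them all at once"],
)

# An empty list would mean the package is complete enough to hand over.
print(story.missing_sections())
```

A check like this would run before generation starts, so that an incomplete package is sent back to the specification stage rather than filled in by guesswork.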

Spec Kit follows a similar philosophy. The specification constrains the generation. Security requirements and compliance rules are baked into the spec from day one, not bolted on after.

The efficiency gain is real. But so is the risk. An executive branch without checks becomes authoritarian. Code generation without validation becomes technical debt at machine speed.

The Judicial Branch: Quality Review as Constitutional Court

The judiciary reviews whether the executive acted within the law. In software, this is the quality gate — code review, testing, validation, compliance checking. This is where current AI-driven development is weakest.

Too many teams generate code with frontier models and then skip meaningful review because the output “looks right.” This is the equivalent of a government without courts. Both BMAD and Spec Kit recognize this gap. BMAD includes rigorous pull-request reviews where humans and AI agents inspect generated artifacts, creating a “continuous compliance ledger” — an auditable trail from requirement to deployment. Spec Kit provides an /analyze command that acts as a quality gate, checking internal consistency of specs and plans.
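
An auditable trail from requirement to deployment can be pictured as a simple ledger that is checked for gaps. The sketch below is a hedged illustration: `LedgerEntry`, `audit_gaps`, and the sample identifiers are hypothetical and are not taken from BMAD or Spec Kit.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LedgerEntry:
    """One row of a hypothetical requirement-to-deployment audit trail."""
    requirement_id: str
    commit: Optional[str] = None       # generated change implementing the requirement
    reviewed_by: Optional[str] = None  # human or agent who approved the change
    deployed: bool = False


def audit_gaps(ledger: list[LedgerEntry]) -> dict[str, str]:
    """Map each requirement with a broken trail to the reason it fails."""
    gaps: dict[str, str] = {}
    for entry in ledger:
        if entry.commit is None:
            gaps[entry.requirement_id] = "no implementation recorded"
        elif entry.reviewed_by is None:
            gaps[entry.requirement_id] = (
                "deployed without review" if entry.deployed else "awaiting review"
            )
    return gaps


# Sample ledger; all identifiers are invented for illustration.
ledger = [
    LedgerEntry("REQ-1", commit="a1b2c3", reviewed_by="alice", deployed=True),
    LedgerEntry("REQ-2", commit="d4e5f6", deployed=True),
    LedgerEntry("REQ-3"),
]

for requirement_id, reason in audit_gaps(ledger).items():
    print(requirement_id, "->", reason)
```

The point of the design is that every requirement either has a complete trail or shows up with a named reason, which is what makes "looks right" insufficient as a review outcome.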

But tooling alone is insufficient. A model can tell you whether code compiles and passes tests. It cannot tell you whether the code solves the right problem for the right user in the right regulatory context. Validation, critical reasoning, architectural thinking — these are not nice-to-have skills. They are the judiciary of your development process.

Beyond Code: Agents, Decisions, and the Vanishing Middle Layer

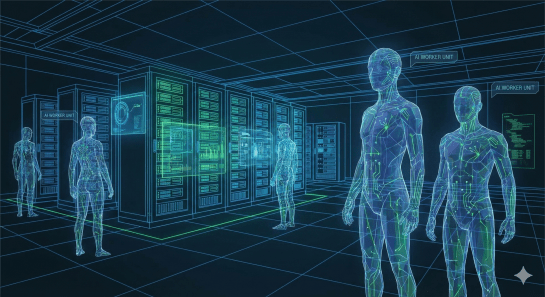

This separation of powers extends beyond code. Across the enterprise, AI agents are replacing traditional applications. Instead of building a reporting dashboard, you configure an agent workflow that queries data and delivers insights. Instead of a project management tool with seventeen tabs, you define the outcome and let orchestrated agents handle the rest.

Less management overhead, more focus on core tasks. Decision-making improves because data becomes accessible through natural language rather than complex BI tooling. But the three-branch principle still applies. Someone specifies. The model executes. Someone validates. Without all three, you have automation without accountability.
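
The three-branch rule can be expressed as a small accountability check. This is a sketch under stated assumptions, not a prescribed implementation: the `Change` record, the role names, and the extra rule that an executor may not validate its own output are illustrative choices that extend the metaphor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    """A unit of work passing through the three hypothetical branches."""
    specified_by: Optional[str]  # legislative: who owns the intent
    executed_by: Optional[str]   # executive: model or agent that did the work
    validated_by: Optional[str]  # judicial: who reviewed the result


def accountability_issues(change: Change) -> list[str]:
    """List every way the change falls short of the three-branch rule."""
    issues = []
    if not change.specified_by:
        issues.append("no owner for the specification")
    if not change.executed_by:
        issues.append("no record of what executed the work")
    if not change.validated_by:
        issues.append("output was never validated")
    elif change.validated_by == change.executed_by:
        # Assumption: self-review does not count as an independent check.
        issues.append("executor validated its own output")
    return issues


# Role names below are invented for illustration.
self_reviewed = Change("product-owner", "coding-agent", "coding-agent")
independent = Change("product-owner", "coding-agent", "senior-engineer")

print(accountability_issues(self_reviewed))
print(accountability_issues(independent))
```

The same check applies whether the executed work is generated code or an agent workflow that produces a report.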

What Does This Mean for IT Specialists?

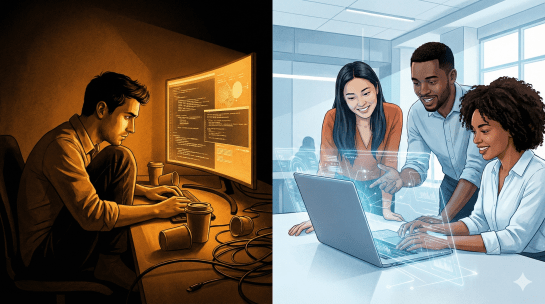

If you are a developer today, your value is shifting. Writing code from scratch becomes a smaller part of the job. Specifying what should be built, reviewing what was generated, and understanding why architectural decisions matter — this is where human professionals become irreplaceable.

The psychological impact should not be underestimated. Many engineers built their identity around the craft of writing code. Being told that a model can do this in seconds is unsettling. But the constitutional metaphor offers a reframe. You are not being replaced by the executive branch. You are being promoted to the legislature and the judiciary.

The learning pressure is significant. Writing specifications that frontier models can execute against, developing the critical eye to catch subtly wrong generated code, understanding frameworks like BMAD or Spec Kit — these skills must be learned on the job, now.

For technology leaders, the message is clear. Do not let your teams generate code without specification governance and quality review. Build the three branches into your SDLC. Treat specification as a first-class engineering activity. Invest in your people’s ability to think critically about machine-generated output. The models are capable. The tools exist. What is missing is the governance mindset. It is time to build it.

It depends. There is no single true answer, and time will tell. Various studies suggest that the productivity gains are real but have been consumed by the growing complexity of integrating the digital world. Did early digital assistants such as electronic mail, the Internet, and the electronic calendar make individuals more productive? The recent shift of more and more decisions onto non-experts has reduced productivity overall. In the late 1990s, an administrator organized many parts of the business; now more and more highly paid employees do this work themselves. Of course, there are many supporting tools. However, it is obvious that experts are much better at handling the right processes; individuals can do so as well, but less effectively the more seldom they do it.
